The past decade has seen the rapid development of the online newsroom. News published online is now the main news outlet, surpassing traditional printed newspapers. This poses challenges to both the production and the consumption of that news. With so many sources of information available, it is important to find ways to cluster and organise the documents if one wants to understand this new system. Traditional approaches to the problem of clustering documents usually embed the documents in a suitable similarity space. Previous studies have reported on the impact of the similarity measures used for clustering textual corpora. These similarity measures are usually calculated for bag-of-words representations of the documents, which makes the final document-word matrix high dimensional. Feature vectors with more than 10,000 dimensions are common, and algorithms have severe problems with the high dimensionality of the data. A novel bio-inspired approach to the problem of traversing the news is presented. It finds Hamiltonian cycles over documents published by the newspaper The Guardian. A Second Order Swarm Intelligence algorithm based on Ant Colony Optimisation was developed that uses a negative pheromone to mark unrewarding paths with a “no-entry” signal. This approach follows recent findings of negative pheromone usage in real ants. In this case study the corpus of data is represented as a bipartite relation between documents and keywords entered by the journalists to characterise the news. A new similarity measure between documents is presented, based on the Q-analysis description of the simplicial complex formed between documents and keywords. The eccentricity between documents (two simplices) is then used as a novel measure of similarity between documents. The results show that the Second Order Swarm Intelligence algorithm performs better on benchmark instances of the travelling salesman problem, with faster convergence and optimal results.
The addition of the negative pheromone as a no-entry signal clearly improved the quality of the solutions. The application of the algorithm to the corpus of news of The Guardian creates a coherent navigation system among the news. This allows users to navigate the news published during a certain period of time in a semantic sequence instead of a time sequence. This work has broader application, as it can be applied to many cases where the data is mapped to bipartite relations (e.g. protein expressions in cells, sentiment analysis, brand awareness in social media, routing problems), and it highlights the connectivity of the underlying complex system.

Peer Assessment in Architecture Education

Peer Assessment in Architecture Education – Brno – ICTPI'14 – Mafalda Teixeira de Sampayo, David Sousa-Rodrigues, Cristian Jimenez-Romero, and Jeffrey Johnson

The role of peer assessment in education has become of particular interest in recent years, mainly because of its potential to improve students’ learning and to help teachers and tutors use their time more efficiently, so that the results of students’ assessments are available sooner. Peer assessment is also relevant in the context of distance learning and massive open online courses (MOOCs).

The discipline of architecture is dominated by an artistic language that has its own way of being discussed and applied. The analysis and criticism of an architecture project go beyond the technical components and programme requirements that need to be fulfilled. Mastering the language of architecture is an essential tool in the architect’s toolbox. In this context, peer assessment activities can help students develop these skills early in their undergraduate education.

In this work we show how peer assessment acts as a formative activity in architecture teaching. Peer assessment leads the students to develop critical and higher-order thinking processes that are fundamental for the analysis of architecture projects. The applicability of this strategy to massive open online education systems has to be considered, as the heterogeneous and unsupervised environment requires confidence in the usefulness of this approach. To study this we designed a local experiment to investigate the role of peer assessment in architecture teaching.

This experiment showed that students reacted positively to the peer assessment exercise and looked forward to participating when it was announced. Before the assessment, students felt engaged by the responsibility of marking their colleagues. After the first iteration of the peer assessment, professors noted that students used elements of the qualitative assessment in their architecture discourse, and tried to answer the criticisms their colleagues had made of their projects. This led their work in directions some hadn’t considered before.

The marks awarded by the students are in good agreement with the final scores awarded by the professors. In only 5 cases did the average score of the peer assessment differ by more than 10% from the marks given by the professors. It was also observed that the professors’ marks were slightly higher than the average of the peer marking. No correlation was observed between the marks given by a student as marker and the final score given to that student by the professors.

The data produced in this experiment show that peer assessment works as a feedback mechanism in the construction of a critical thought process and in the development of an architectural discourse. They also show that students tend to mark their colleagues with great accuracy. Both of these results are of great importance for the possible application of peer assessment strategies to massive open online courses and distance education.

Systems that are Sensitive to Initial Conditions

Systems that are sensitive to initial conditions are those whose trajectories diverge in ways that are not predictable. One example is the chaotic Lorenz attractor, but even simpler systems can show dependence on the initial conditions.

In this example the diverging behaviour is obtained with the totally deterministic algorithm “2 times modulo 1”, or (2x%1), which you can even try out on your calculator.

1. take a random number between 0 and 1 (e.g. 0.823)

2. multiply that number by 2 (e.g. 2*0.823=1.646)

3. calculate the modulo 1 (%) of this value (e.g. 1.646 % 1=0.646). Taking modulo 1 here simply means dropping the integer part of the number and keeping only the fractional part.

4. use the result obtained in step 3 and repeat from step 2.

Now start with a second number very similar to the first (e.g. 0.825) and repeat the process (in the animation the initial difference is just 0.01% between the two values).

You’ll see that for the first iterations the calculations (the trajectory) are similar but suddenly they jump all around. After some iterations you can’t predict the behavior of the second trajectory, even if you know the first trajectory. This clearly shows that the system is sensitive to initial conditions. A very simple and strange system indeed.
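
If you don’t have a calculator at hand, the steps above can be sketched in a few lines of Python. This is only an illustration, not the code behind the animation: the function name `doubling_map`, the 20 iterations and the use of exact fractions are my own assumptions. Fractions are used because ordinary binary floating-point numbers lose one bit of the starting value at every doubling, so a float trajectory collapses to exactly 0 after about 50 iterations.

```python
from fractions import Fraction


def doubling_map(x0, steps):
    """Return [x0, f(x0), f(f(x0)), ...] for f(x) = (2 * x) % 1."""
    trajectory = [x0]
    for _ in range(steps):
        # multiply by 2, then keep only the fractional part
        trajectory.append((2 * trajectory[-1]) % 1)
    return trajectory


# Two starting points that differ by only 0.002, stored as exact rationals
a = doubling_map(Fraction(823, 1000), 20)
b = doubling_map(Fraction(825, 1000), 20)

# Print both trajectories side by side, with the gap between them
for step, (xa, xb) in enumerate(zip(a, b)):
    gap = abs(xa - xb)
    print(f"{step:2d}  {float(xa):.3f}  {float(xb):.3f}  gap={float(gap):.3f}")
```

The gap starts by simply doubling (0.002, 0.004, 0.008, ...), which is why the two trajectories look alike at first and then stop resembling each other once the gap is as large as the values themselves.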

R Tip: define ggplot axis labels

Formatting axis text and labels in ggplot2 is easy. A common task when producing plots for publication is to replace the default labels. Default axis labels tend to reflect the names of the variables used, and these are not always the most descriptive, at least not when you are publishing the plots in a scientific journal. So let’s break down some ways to personalise ggplot plot axes.

Quick Navigation:

- The default ggplot axis labels

- Setting axes labels in ggplot with scales

- Formatting labels text for size and rotation?

For this formatting example I’ll use the movies dataset. It shipped with older ggplot2 releases; since ggplot2 2.0.0 it lives in the separate ggplot2movies package. The first thing we need to do is load the ggplot2 library and then the movies dataset:

    library(ggplot2)
    library(ggplot2movies)  # the movies dataset moved here in ggplot2 2.0.0
    data(movies)

The default ggplot axis labels

By default, ggplot takes the axis labels directly from the selected aesthetics, e.g.:

    # base plot: year on the x axis, rating as points plus a smoothed trend
    p0 <- ggplot(data=movies, aes(x=year))
    p0 <- p0 + geom_point(aes(y=rating)) + geom_smooth(aes(y=rating))
    p0

To change the axis labels we can use xlab and ylab, which set the labels of the x and y axes.

    p0 + xlab('The glorious years of the movies') + ylab('The public ratings')

Setting axes labels in ggplot with scales

    p0 +
      scale_x_continuous('The glorious years of the movies (with scales)') +
      scale_y_continuous('The public ratings (with scales)')

Also worth investigating is the labs function, which lets you change the axis labels and the title, e.g.:

    p0 + labs(
      x='The glorious years of the movies (with labs)',
      y='The public ratings (with labs)'
    )

Formatting labels text for size and rotation?

Ggplot can change axis label orientation, size and colour. To rotate the axis text in ggplot you just add the angle property. To change the size you use size, and for colour you use color (ggplot2 accepts both the US spelling color and the British colour). Finally, note that you can use the face property to define whether the font is bold or italic.

    p0 +
      xlab('The Years of Cinema') +
      ylab('Public Ratings') +
      theme(
        axis.text.x=element_text(angle=90, size=8),
        axis.title.x=element_text(angle=10, color='red'),
        axis.title.y=element_text(angle=80, color='blue', face='bold', size=14)
      )

The formatting of the text in the labels is a bit counter-intuitive because it uses a slightly different nomenclature. The formatting is done with the theme function, by defining element_text objects with the wanted format. In the example above, axis.text.x defines the format of the tick labels, while axis.title.x and axis.title.y define the format of the axis labels.

A good way to learn all the elements that a ggplot theme can format is to read the help page, by entering ?theme at the R console. These examples are just scratching the surface of what you can do, but I hope they can get you started in formatting text size and orientation inside ggplot plots.

Side Note: Did you notice how crappy the movies from the 70s, 80s and 90s were?

Is the singularity cost prohibitive?

The idea that a technological singularity will hit us at some point in the future, and that at that moment we’ll be presented with (now) unimaginable artificial intelligence (or at least greater-than-human intelligence, which isn’t saying much, because “greater than human intelligence” is itself full of caveats), is a recurrent buzzword in the semi-circles of AI, technophiles and geeks. But in the end, is it?

Alan Winfield recently wrote a paper in which he explores the energetic cost of evolving human-equivalent AI (or even artificial general intelligence). From the parallel he draws with the evolution of complexity in artificial entities, it is clear that the artificially evolved entities that would be responsible for the singularity won’t come to be through an effort of exhaustive evolutionary computation.

So, are we heading to a technological singularity?

I don’t think so. Mainly because it is my belief that, in many respects, the singularity is more a technological horizon than a fixed point in time. My understanding is that the basic idea of what a super-intelligence is, is itself wrong.

In the same way that the technologies and knowledge of the 21st century would seem super-intelligent to someone from the 12th century, what we are saying about the super-intelligence of the singularity might in practice be our 21st-century view of something in the future. So it might be that the singularity is nothing more than a technological horizon.

If in the 12th century this horizon was perhaps 600 years away, it might be that now we can’t really predict the future, with any kind of certainty, further than 50 years ahead. This doesn’t mean the horizon will collapse to zero in the future. It might be the case that the singularity behaves like the speed of light: you can get very close to it without ever reaching it. You might be able to bring the technology horizon down to a few years, months or even days, but it will always be something unreachable, just as tomorrow is always 24 hours away.

The subject of the singularity is of interest mainly because of the debates it generates and because the idea forces us to use one of our most powerful tools for creating new things: imagination.

Global Brain: Web as Self-organizing Distributed Intelligence – Francis Heylighen

OVERVIEW: Distributed intelligence is an ability to solve problems and process information that is not localized inside a single person or computer, but that emerges from the coordinated interactions between a large number of people and their technological extensions. The Internet and in particular the World-Wide Web form a nearly ideal substrate for the emergence of a distributed intelligence that spans the planet, integrating the knowledge, skills and intuitions of billions of people supported by billions of information-processing devices. This intelligence becomes increasingly powerful through a process of self-organization in which people and devices selectively reinforce useful links, while rejecting useless ones. This process can be modeled mathematically and computationally by representing individuals and devices as agents, connected by a weighted directed network along which “challenges” propagate. Challenges represent problems, opportunities or questions that must be processed by the agents to extract benefits and avoid penalties. Link weights are increased whenever agents extract benefit from the challenges propagated along it. My research group is developing such a large-scale simulation environment in order to better understand how the web may boost our collective intelligence. The anticipated outcome of that process is a “global brain”, i.e. a nervous system for the planet that would be able to tackle both global and personal problems. via Summer School in Cognitive Sciences 2014, Web Science and the Mind

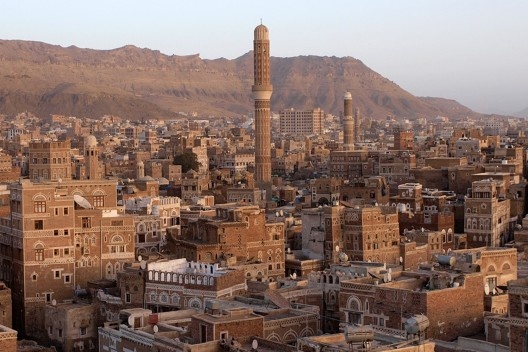

The regulations of the Islamic city

The Islamic laws of al-fikh cover the urban organisation of Islamic cities, which does not strictly follow the impositions of a plan, defends the interests of the family, and reveals a self-sufficient way of making a city, sustained by its own laws. via arquitextos 169.04 oriente: Os regulamentos da cidade islâmica | vitruvius.

Justin Reich on MOOCs and the Science of Learning

Millions of learners on platforms like edX and Coursera are generating terabytes of data tracking their activity in real time. Online learning platforms capture extraordinarily detailed records of student behavior, and now the challenge for researchers is to explore how these new datasets can be used to advance the science of learning. In this edX co-sponsored talk, Justin Reich (educational researcher, co-founder of EdTechTeacher, and Berkman Fellow) examines current trends and future directions in research into online learning in large-scale settings. via Education Week